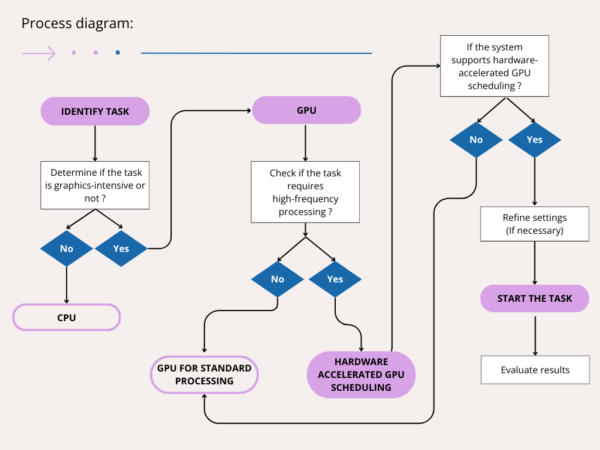

For professionals working in 3D design, animation, and machine learning, the ability to process large amounts of data efficiently is crucial. GPU acceleration improves performance by moving compute-intensive tasks from the CPU to the GPU.

This speeds up the processing of graphics-intensive data and frees the CPU for other jobs. Hardware-accelerated GPU scheduling adds a further step that can improve things again.

It changes how the CPU and GPU work together, which can reduce wait times and make the system more responsive. Understanding both technologies matters for these professionals because they can affect the efficiency and quality of their work.

GPU Acceleration Explained

In short, GPU acceleration is the use of a graphics processing unit (GPU) alongside the central processing unit (CPU). This method is especially beneficial when the CPU is too slow for a workload or is busy with other tasks, because the GPU handles highly parallel operations more effectively.

By using the parallel processing abilities of modern GPUs, professionals in 3D rendering, graphic design, and similar areas can experience significant performance gains. GPUs are well suited to the high-frequency tasks involved in rendering complex scenes, processing large textures, and handling frame data, all of which are common in this kind of work.

What is Hardware-Accelerated GPU Scheduling?

Hardware-accelerated GPU scheduling is a Windows feature introduced with the Windows 10 May 2020 Update (version 2004) as part of the Windows Display Driver Model (WDDM) 2.7. It allows the GPU to manage its own memory and queue tasks directly, which can help reduce input latency and boost performance.

Unlike traditional GPU scheduling, where the CPU plays a significant role in managing and directing tasks to the GPU, hardware-accelerated GPU scheduling enables the GPU to assume more responsibility for scheduling its work. This feature leads to a more efficient allocation of tasks, as the GPU scheduler handles the specific needs of graphics-intensive data.

For users, this means smoother rendering experiences, quicker turnaround times for complex projects, and an overall more responsive system when working with 3D rendering software and other demanding applications.

The Role of the GPU Scheduler

The GPU scheduler is a component of the WDDM that plays a crucial role in managing how tasks are distributed and executed on the GPU. It controls how frames and graphics-intensive data are processed, ensuring that high-priority tasks are processed quickly.
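The idea of dispatching high-priority work first can be illustrated with a small toy model. The sketch below is not how the WDDM scheduler is implemented; the class name `ToyGpuQueue`, the task names, and the numeric priorities are all hypothetical and serve only to show priority-ordered dispatch with first-in, first-out ordering inside each priority level.

```python
import heapq
from itertools import count


class ToyGpuQueue:
    """Toy priority queue: a lower number means a higher priority."""

    def __init__(self):
        self._heap = []
        self._order = count()  # tie-breaker that preserves submission order

    def submit(self, name, priority):
        heapq.heappush(self._heap, (priority, next(self._order), name))

    def drain(self):
        """Return task names in the order they would be dispatched."""
        dispatched = []
        while self._heap:
            _, _, name = heapq.heappop(self._heap)
            dispatched.append(name)
        return dispatched


queue = ToyGpuQueue()
queue.submit("background texture upload", priority=2)
queue.submit("viewport frame", priority=0)
queue.submit("preview render tile", priority=1)
queue.submit("cursor overlay frame", priority=0)

order = queue.drain()
print(order)
```

Both priority-0 frames are dispatched before the preview tile and the background upload, even though the upload was submitted first.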

The scheduler also helps hide scheduling costs by efficiently grouping tasks, which can lead to improved performance. With hardware-accelerated GPU scheduling, the GPU scheduler gains more control, allowing it to offload high-frequency tasks from the CPU, thereby reducing the effort required to switch between them.
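To see why grouping tasks can hide scheduling costs, consider a simplified cost model in which a fixed overhead is paid once per submission and each task also needs a fixed amount of work. The function name `total_cost_us` and every number below are illustrative assumptions, not measurements of any real driver or GPU.

```python
def total_cost_us(num_tasks, batch_size, overhead_us, work_us):
    """Total time when a fixed overhead is paid once per batch, not per task."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    num_batches = -(-num_tasks // batch_size)  # ceiling division
    return num_batches * overhead_us + num_tasks * work_us


# Hypothetical figures: 1000 tasks, 50 us overhead per submission, 100 us of work each.
unbatched = total_cost_us(1000, batch_size=1, overhead_us=50, work_us=100)
batched = total_cost_us(1000, batch_size=20, overhead_us=50, work_us=100)

print(unbatched, batched)
```

In this model the amount of actual work is identical in both cases. Only the overhead term shrinks, from 1000 submissions to 50.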

This option is particularly beneficial for users, as it means that their dedicated graphics hardware can operate with greater efficiency, allowing them to focus on the creative aspects of their work without being hindered by technical limitations.

Understanding the Windows Display Driver Model

The Windows Display Driver Model (WDDM) is the graphics driver architecture provided by Microsoft for Windows operating systems. It defines how the operating system and the graphics hardware communicate, with the aim of improving graphics performance and reliability.

WDDM plays a critical role in the application of hardware-accelerated GPU scheduling, as it defines how the GPU scheduler operates within the system. The model supports various graphics operations, including those required for 3D rendering, video playback, and gaming.

Understanding WDDM is crucial because it directly affects how graphics drivers interact with the system and how efficiently applications can utilize the underlying hardware. With the latest updates to WDDM, users can expect significant enhancements in how their GPU engines manage frame buffering and scheduling, leading to a more optimized and responsive experience when working with graphics-intensive data.

Does Hardware Scheduling Affect Rendering?

Hardware-accelerated GPU scheduling can affect GPU rendering, which is a resource-intensive task that benefits from any improvement in efficiency.

With hardware scheduling, the GPU can better manage the rendering pipeline, potentially leading to faster completion times and the ability to handle more complex scenes. The real impact varies with the specific software and hardware configuration.

Impact on 3D Rendering and Animation

In 3D rendering and animation, hardware scheduling can have a noticeable impact. By minimizing the overhead of task management, the GPU can spend more of its time on rendering, which can result in a smoother and more effective workflow.

This is especially relevant for animation studios and 3D artists who need responsive workstations or GPU servers that keep pace with frequent design iterations. Being able to render and review changes quickly can improve the creative process and shorten project turnaround.

Relevance for Machine Learning and Deep Learning Tasks

Machine learning and deep learning developers also benefit from hardware-accelerated GPU scheduling. These fields often involve training complex models that require significant computational power.

When tasks are scheduled on the GPU more efficiently, developers may be able to reduce the time it takes to train models, leading to more efficient experimentation and faster deployment of machine learning solutions. Lower latency can also improve the experience of working with interactive machine-learning applications.

Requirements to Use GPU Hardware Scheduling

Your system needs to meet specific requirements to use hardware-accelerated GPU scheduling. First, the feature is only available on versions of Windows that ship a recent enough WDDM, which in practice means Windows 10 version 2004 or later. Second, not all GPUs can use it: you need a compatible GPU together with a driver that supports the feature.

For professionals in the 3D rendering and computing fields, making sure their systems satisfy these requirements is the first step toward benefiting from hardware scheduling.

Install the latest drivers from the GPU manufacturer, since support for the new scheduler is delivered through driver updates. Once these requirements are met, you may see reduced input latency and better performance in tasks that demand real-time interaction and rapid feedback, such as 3D modeling and animation.
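The operating system requirement can be checked with a short script. The sketch below assumes that build 19041 (Windows 10 version 2004) is the minimum, and the helper names `windows_build` and `os_supports_hags` are made up for this example. It checks the Windows build only and says nothing about whether the GPU or driver supports the feature.

```python
import platform

MIN_BUILD = 19041  # assumed minimum: Windows 10 version 2004


def windows_build(version_string):
    """Extract the build number from a string such as '10.0.19045'."""
    parts = version_string.split(".")
    if len(parts) < 3 or not parts[2].isdigit():
        return None
    return int(parts[2])


def os_supports_hags(system, version_string):
    """Return True if the OS is Windows and its build is new enough."""
    if system != "Windows":
        return False
    build = windows_build(version_string)
    return build is not None and build >= MIN_BUILD


print(os_supports_hags(platform.system(), platform.version()))
```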

Checking for Supported GPU and Driver Updates

To determine whether your system can use hardware-accelerated GPU scheduling, first check that your GPU is supported. To do that, visit the GPU manufacturer's website and review the list of compatible models.

Once confirmed, the next step is to check if you have installed the latest graphics drivers. These drivers are crucial as they include the necessary updates to enable hardware scheduling. You can typically find driver updates through the manufacturer’s website or the device manager on your system. Always keep the drivers up-to-date to access new features like hardware scheduling and maintain optimal performance and system security.
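As a convenience, the installed adapters and their driver versions can also be listed from a script. The sketch below assumes PowerShell is available on the PATH and queries the `Win32_VideoController` class. The function names `parse_adapters` and `list_adapters` are hypothetical. The version string reported by Windows may be formatted differently from the version number the GPU vendor advertises.

```python
import json
import subprocess
import sys

PS_COMMAND = (
    "Get-CimInstance Win32_VideoController | "
    "Select-Object Name, DriverVersion | ConvertTo-Json"
)


def parse_adapters(json_text):
    """Turn ConvertTo-Json output into a list of (name, driver_version) pairs."""
    if not json_text.strip():
        return []
    data = json.loads(json_text)
    if isinstance(data, dict):  # a single adapter is emitted as one object
        data = [data]
    return [(item.get("Name"), item.get("DriverVersion")) for item in data]


def list_adapters():
    """Query the adapters on Windows; return an empty list elsewhere."""
    if sys.platform != "win32":
        return []
    result = subprocess.run(
        ["powershell", "-NoProfile", "-Command", PS_COMMAND],
        capture_output=True, text=True, check=True,
    )
    return parse_adapters(result.stdout)


for name, version in list_adapters():
    print(f"{name}: driver {version}")
```

Compare the reported version with the minimum driver version the manufacturer lists for hardware scheduling support.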

Regular driver updates can also bring improvements and bug fixes that can enhance the overall stability of your GPU-intensive tasks. In the following sections, we will continue to explore the pros and cons of using GPU hardware scheduling, how to turn it on or off, and its impact on rendering and other compute-heavy tasks.

Pros and Cons of Using GPU Hardware Scheduling

Like any other new technology, GPU hardware scheduling comes with its own advantages and limitations.

Below, we review the key pros and cons so you can understand its potential impact on your projects.

Benefits of Reduced Latency and Improved Performance

The professional computing community met the introduction of hardware-accelerated GPU scheduling with both enthusiasm and skepticism. On one hand, it promises to reduce latency and improve performance, particularly when the GPU handles graphics-intensive data. This improvement can lead to a more fluid and responsive user experience, which is especially valuable in real-time applications such as gaming or interactive 3D modeling.

On the other hand, there are potential downsides to consider. For instance, older GPUs may not support this new feature, and even with a supported GPU, there might be compatibility issues with specific applications.

Additionally, while the new scheduler reduces input lag, it may also introduce new complexities in system configuration that could deter less tech-savvy users.

Considerations and Potential Downsides

Despite the advantages, there are considerations that users must be aware of before enabling hardware scheduling. Some users have reported that the feature does not always result in noticeable performance gains and, in some cases, might even lead to stability issues.

This is particularly true for older GPU models that may not fully support the new scheduler. Furthermore, activating hardware scheduling involves modifying default graphics settings, which might be discouraging for users who are not familiar with adjusting system configurations.

It is also worth noting that while the scheduler can reduce input lag, it may not be appropriate for all kinds of workloads, and users should assess whether the advantages outweigh the possible risks for their particular use cases. Considering these factors, you can make a well-informed choice about whether to enable this feature in your computing environment.
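One way to make that assessment concrete is to record frame or render times for the same workload with the feature off and on, then compare the summaries. The sketch below assumes you already have such samples in milliseconds. The helper names are hypothetical, and the sample lists are placeholders that show the calling convention, not real measurements.

```python
import math
import statistics


def summarize(frame_times_ms):
    """Return the mean and nearest-rank 99th-percentile frame time."""
    ordered = sorted(frame_times_ms)
    rank = max(1, math.ceil(0.99 * len(ordered)))
    return {"mean": statistics.fmean(ordered), "p99": ordered[rank - 1]}


def relative_change(before, after):
    """Percentage change from before to after; negative means faster."""
    return (after - before) / before * 100.0


# Placeholder samples only; replace with your own recordings.
scheduling_off = [10.0, 20.0, 30.0, 40.0]
scheduling_on = [10.0, 15.0, 25.0, 30.0]

off = summarize(scheduling_off)
on = summarize(scheduling_on)
print(f"mean change: {relative_change(off['mean'], on['mean']):.1f}%")
print(f"p99 change:  {relative_change(off['p99'], on['p99']):.1f}%")
```

Looking at a high percentile as well as the mean helps reveal stutter that an average would hide. Repeating each run several times reduces the risk of mistaking noise for a real difference.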

How to Enable or Disable Hardware Scheduling

In this section, you’ll get guidance on how to change default graphics settings. For Windows users interested in taking advantage of hardware-accelerated GPU scheduling, enabling or disabling is relatively straightforward.

The setting can be changed through the Settings app or, for more advanced users, through the Registry Editor. Opening the Registry Editor requires administrator rights, so Windows will show a User Account Control (UAC) prompt, which is a built-in safety feature. Enabling the feature allows the GPU's scheduling processor to take over task prioritization and quanta management, which can lead to a more efficient distribution of tasks and better performance for graphics-intensive workloads.

Using the Settings App

Hardware scheduling can be enabled in the Windows Settings app.

To enable it, follow these steps:

- Open Settings on your Windows system.

- Under the System category, select Display, then click Graphics settings. On Windows 11, the option sits under Graphics, in the default or advanced graphics settings depending on the version.

- Turn on the Hardware-accelerated GPU scheduling toggle.

This change to the default graphics settings is user-friendly and does not require advanced technical knowledge. Restart your system afterward for the change to take effect. If the toggle is missing, the GPU or its driver most likely does not support the feature.

Advanced Methods: Registry Editor

For users who prefer a more hands-on approach or need to troubleshoot issues related to hardware scheduling, the Registry Editor can be used. Press Windows key + R to open the Run dialog, type regedit, and press Enter. From there, you can access the Windows registry and make more granular changes to how the GPU scheduler operates.

If the workstation has a multi-GPU configuration, pay attention to which display is selected, because the change should be made for every display. Failing to do so may increase input latency due to the asynchronous operation of the various adapters, so use this method with caution.

Making incorrect changes to the registry can cause your system to become unstable. We recommend this approach only for experienced users who are comfortable with concepts such as quanta management and control prioritization. Always back up your registry before making any adjustments.
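Before changing anything, it can help to inspect the current state. The read-only sketch below assumes the setting is stored as a DWORD named `HwSchMode` under `HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\GraphicsDrivers`, with 2 meaning enabled and 1 meaning disabled. This is the commonly reported location rather than something the article states, so verify it on your own system. The function names are hypothetical.

```python
import sys

# Assumed registry location and value meanings; verify before relying on them.
KEY_PATH = r"SYSTEM\CurrentControlSet\Control\GraphicsDrivers"
VALUE_NAME = "HwSchMode"
MODES = {1: "disabled", 2: "enabled"}


def describe_mode(value):
    """Translate the raw DWORD into a human-readable state."""
    if value is None:
        return "not set (driver or OS default applies)"
    return MODES.get(value, f"unknown value {value}")


def read_hw_sch_mode():
    """Return the HwSchMode DWORD, or None if it is missing or not on Windows."""
    if sys.platform != "win32":
        return None
    import winreg  # standard library, available on Windows only

    try:
        with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, KEY_PATH) as key:
            value, _value_type = winreg.QueryValueEx(key, VALUE_NAME)
            return value
    except FileNotFoundError:
        return None


print("Hardware-accelerated GPU scheduling:", describe_mode(read_hw_sch_mode()))
```

The script only reads the value. Writing to this key requires administrator rights and a restart, and the key should be exported as a backup first, for example with the `reg export` command.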

Hardware Accelerated GPU Scheduling vs. GPU Acceleration

While hardware-accelerated GPU scheduling and GPU acceleration are connected, they are not identical. The former is a specific feature that optimizes how tasks are organized and executed on the GPU, while the latter refers to transferring specific tasks to the GPU to improve performance.

Professionals who depend on advanced technology should understand the difference between the two in order to manage their substantial workloads well.

Comparing Scheduling Costs and Performance Impact

When comparing hardware-accelerated GPU scheduling to traditional GPU acceleration, one must consider the scheduling costs associated with each. The new scheduler aims to hide scheduling costs by allowing the GPU to manage its task queue, which can lead to performance improvements.

However, this does not mean that the GPU is doing less work. Instead, it’s working more efficiently, doing the same tasks faster. As a result, applications may run more smoothly because the GPU can balance the workload better and reduce input latency.

Context Switching and Quanta Management

Another technical aspect to consider is context switching and quanta management. In traditional GPU acceleration, the CPU is heavily involved in managing the context switching between tasks, which can introduce latency.

With hardware-accelerated GPU scheduling, the GPU takes on more of this responsibility, which can lead to a more streamlined process. Quanta management, or the handling of task execution time slices, can also improve, as the GPU scheduler can allocate quanta based on the needs of each task. This is particularly beneficial for tasks that require a high-priority thread running continuously, such as real-time simulations or complex rendering jobs.
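The relationship between quanta and context switches can be shown with a toy round-robin simulation. This is a generic time-slicing model, not the algorithm used by any actual GPU scheduler. The function name `round_robin`, the task names, and the durations are invented for illustration.

```python
from collections import deque


def round_robin(tasks, quantum_ms):
    """Simulate time-sliced execution.

    tasks maps a task name to its total run time in ms. Returns the finish
    time of each task and the number of context switches that occurred.
    """
    ready = deque(tasks.items())
    clock = 0
    switches = 0
    finished = {}
    previous = None
    while ready:
        name, remaining = ready.popleft()
        if previous is not None and previous != name:
            switches += 1  # a different task takes over the GPU
        run = min(quantum_ms, remaining)
        clock += run
        remaining -= run
        if remaining > 0:
            ready.append((name, remaining))  # not done: back of the queue
        else:
            finished[name] = clock
        previous = name
    return finished, switches


workload = {"render": 6, "simulation": 4}
short_quanta = round_robin(workload, quantum_ms=2)
long_quanta = round_robin(workload, quantum_ms=4)
print(short_quanta)
print(long_quanta)
```

With the shorter quantum the two tasks alternate more often, so the run incurs four context switches instead of two. Choosing the slice length per task is the kind of decision quanta management is concerned with.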

Conclusion: Should You Use Hardware-Accelerated GPU Scheduling?

For professionals in 3D design, animation, and machine learning, deciding to use this feature should be based on a clear understanding of their specific needs and system capabilities. While the promise of reduced input latency and improved performance is enticing, it’s essential to weigh these advantages against the compatibility and stability of your current setup.

Hardware-accelerated GPU scheduling can be a valuable tool for those with the necessary hardware and a desire to stay on the cutting edge of performance optimization. It can improve the rendering and gaming experience, enhance the responsiveness of creative applications, and accelerate the training of machine learning models.

However, if you have an older GPU or value system stability more than marginal performance improvements, leaving the feature disabled and relying on traditional GPU acceleration might be the wiser choice.

Frequently Asked Questions:

How does hardware scheduling interact with dedicated graphics hardware?

Hardware scheduling runs on the dedicated graphics hardware itself, allowing the GPU to manage its task queue and prioritize operations more effectively. This can lead to more efficient resource use and improved performance for tasks that rely heavily on the GPU.

Can hardware scheduling be used in conjunction with cloud render nodes?

Yes, provided the nodes run a Windows version, GPU, and driver that support the feature. In that case it can help optimize the rendering process by improving task management efficiency on the GPU.

What are the long-term benefits of using hardware scheduling for heavy computing tasks?

The potential long-term benefits include more consistent performance, the ability to handle more complex workloads, and reduced rendering times, all of which contribute to a more efficient and productive workflow.