Machine learning is crucial for businesses, researchers, and developers across many industries. It is a branch of AI focused on building systems that learn from data. Unlike conventional software, which follows explicitly programmed rules, machine learning algorithms use historical data to make predictions about new data.

The power of machine learning lies not only in its algorithms but also in the infrastructure that supports them. This is where a machine learning server comes in. It is not just any server: it is built to handle the heavy computational demands of training ML models, with specialized hardware that speeds up processing and makes complex tasks feasible.

For AI professionals, machine learning is more than a curiosity. Machine learning servers offer a way to improve rendering processes, automate routine tasks, and extract insights from vast datasets. For experts and developers, these servers form the foundation of their computational infrastructure, allowing them to test, refine, and deploy machine learning solutions at scale.

Therefore, anyone who wants to use machine learning to its full potential needs to understand the role and requirements of a machine learning server.

What is Machine Learning?

Machine learning automates analytical model building. It is a branch of AI in which systems learn from data to make decisions and predictions. Rather than being explicitly programmed for each case, machine learning algorithms improve their accuracy as they are exposed to more data.

Machine learning is commonly divided into three main categories:

- Supervised learning: models are trained on labeled data, that is, inputs paired with known outputs.

- Unsupervised learning: models are trained on unlabeled data and must discover structure, such as clusters, on their own.

- Reinforcement learning: agents learn how to act in an environment through trial and error, guided by rewards and penalties.
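The first two categories can be illustrated with a toy sketch that uses only the Python standard library. The helpers `fit_line` (ordinary least squares on labeled pairs) and `two_means` (a minimal k-means with k = 2 on unlabeled values) are hypothetical names written for this illustration; in practice these tasks would be handled by a library such as scikit-learn, TensorFlow, or PyTorch. Reinforcement learning is not shown.

```python
from statistics import mean


def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Supervised: fit y = slope * x + intercept to labeled (x, y) pairs
    using ordinary least squares."""
    x_bar, y_bar = mean(xs), mean(ys)
    cov = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    var = sum((x - x_bar) ** 2 for x in xs)
    slope = cov / var
    return slope, y_bar - slope * x_bar


def two_means(values: list[float], iterations: int = 10) -> tuple[float, float]:
    """Unsupervised: split unlabeled 1-D values into two clusters with a
    minimal k-means (k = 2), returning the two cluster centers."""
    low, high = min(values), max(values)  # initial centers
    for _ in range(iterations):
        # Assign each value to its nearest center (ties go to `low`).
        near_low = [v for v in values if abs(v - low) <= abs(v - high)]
        near_high = [v for v in values if abs(v - low) > abs(v - high)]
        # Move each center to the mean of the values assigned to it.
        if near_low:
            low = mean(near_low)
        if near_high:
            high = mean(near_high)
    return low, high


# Labeled data: each input comes with a known output (here y = 2x + 1).
slope, intercept = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
print(slope * 5 + intercept)  # predict for the unseen input x = 5 → 11.0

# Unlabeled data: the algorithm finds the two groups on its own.
print(two_means([1.0, 1.2, 0.8, 10.0, 10.5, 9.5]))  # → (1.0, 10.0)
```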

The power of machine learning depends on both algorithms and the resources available to train models. Deep learning models in particular require substantial computational power, which machine learning servers provide. These servers offer the hardware and software needed to train, test, and deploy models: high-performance CPUs and GPUs, ample memory, and fast storage for data, along with machine learning frameworks such as TensorFlow and PyTorch.

Machine learning is transforming how problems are solved across many sectors. Using it effectively requires understanding both the algorithms and the computational infrastructure behind them, and machine learning servers are a key part of that infrastructure because they supply the hardware and software that models need.

Machine Learning Server Hardware Specifications

Machine learning (ML) and deep learning (DL) demand robust hardware. Choosing the right machine learning server hardware specifications is critical for anyone in data science who needs to deploy solutions that are both efficient and scalable.

This section aims to demystify the hardware requirements for a machine learning server, focusing on the key features that distinguish these powerhouses.

Key Features of a Machine Learning Server

The most important feature of a server optimized for machine learning is a high-performance GPU, such as those offered by Nvidia. GPUs execute the large, highly parallel matrix operations at the core of deep learning much faster than CPUs, which makes them crucial for accelerating training.
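To see why GPU memory matters, the sketch below estimates how much memory a model's state occupies. It assumes a commonly used rule of thumb: about 2 bytes per parameter for 16-bit inference and about 16 bytes per parameter for mixed-precision training with the Adam optimizer. The helper `model_state_gib` and the byte counts are illustrative assumptions; activations, framework overhead, and batch size add more on top, so real requirements are higher.

```python
# Approximate bytes of model state per parameter (rule-of-thumb assumptions).
BYTES_PER_PARAM = {
    # Inference with 16-bit (fp16/bf16) weights only.
    "inference_16bit": 2,
    # Mixed-precision training with Adam: 16-bit weights (2) + 16-bit
    # gradients (2) + fp32 master weights (4) + two fp32 Adam moments (4 + 4).
    "training_mixed_adam": 16,
}


def model_state_gib(num_params: int, mode: str) -> float:
    """Approximate memory for weights, gradients, and optimizer state in GiB.
    Activations and framework overhead are NOT included."""
    return num_params * BYTES_PER_PARAM[mode] / 2**30


for mode in BYTES_PER_PARAM:
    print(f"1B parameters, {mode}: {model_state_gib(1_000_000_000, mode):.1f} GiB")
# 1B parameters, inference_16bit: 1.9 GiB
# 1B parameters, training_mixed_adam: 14.9 GiB
```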

Another critical component is the server’s CPU, which should be able to handle many threads simultaneously. This capability is vital for data preparation, which often requires significant computational resources. The server’s memory (RAM) also plays a crucial role: it must be large enough to hold large datasets and the intermediate data generated during the machine learning process. High-speed storage, preferably enterprise NVMe SSDs, is recommended for fast data reads and writes.
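A quick back-of-the-envelope check helps decide whether a dataset fits in RAM. The sketch assumes a dense numeric dataset stored as 32-bit floats (4 bytes per value) and reads total physical memory through POSIX `os.sysconf`, which is not available on every platform (the helper returns `None` there). The 2x headroom factor is an arbitrary assumption standing in for intermediate copies made during preprocessing, and the function names are hypothetical.

```python
import os
from typing import Optional


def dataset_bytes(rows: int, features: int, bytes_per_value: int = 4) -> int:
    """In-memory size of a dense numeric table (4 bytes per float32 value)."""
    return rows * features * bytes_per_value


def physical_memory_bytes() -> Optional[int]:
    """Total physical RAM via POSIX sysconf, or None where unsupported."""
    try:
        return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    except (AttributeError, ValueError, OSError):
        return None


need = dataset_bytes(rows=10_000_000, features=100)
print(f"dataset needs ~{need / 2**30:.2f} GiB")  # → dataset needs ~3.73 GiB

total = physical_memory_bytes()
if total is not None:
    # Assumed 2x headroom for intermediate copies made during preprocessing.
    print("fits with 2x headroom:", 2 * need <= total)
```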

Python support is a non-negotiable feature for a machine learning server. Given Python’s dominance in the data science community, a server must be able to run Python scripts efficiently. This includes support for various machine learning and deep learning libraries, such as TensorFlow and PyTorch, which are instrumental in developing and training models.
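Before training, it is worth confirming that the server’s Python environment actually has the frameworks and GPU support it is supposed to have. The sketch below uses `importlib.util.find_spec` to see whether PyTorch and TensorFlow are installed and, only if PyTorch is present, calls `torch.cuda.is_available()` to check for an Nvidia GPU. The function names are hypothetical.

```python
import importlib.util
import sys


def framework_report(names=("torch", "tensorflow")) -> dict[str, bool]:
    """Map each framework name to whether it can be imported here."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


def torch_device() -> str:
    """Return 'cuda' if PyTorch is installed and detects a CUDA GPU, else 'cpu'."""
    if importlib.util.find_spec("torch") is None:
        return "cpu"
    import torch  # imported lazily so this check also runs without PyTorch

    return "cuda" if torch.cuda.is_available() else "cpu"


print("Python", sys.version.split()[0])
print("frameworks:", framework_report())
print("device:", torch_device())
```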

Server Grid and Data Center Solutions

Server grids and data center solutions become indispensable for projects that require immense computational power. These setups provide the infrastructure and services needed for large-scale machine learning and deep learning projects.

A server grid distributes computational tasks across multiple servers. This pools the capacity of many machines beyond what any single one can offer and helps ensure that complex machine learning tasks are completed promptly.
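The basic idea behind distributing work across a grid can be sketched as splitting a dataset into one shard per server. The hypothetical `shard` helper below assigns items round-robin, which keeps shard sizes within one item of each other. It is similar in spirit to how data-parallel training splits samples across workers, but a real grid also needs a scheduler, networking, and a way to combine results.

```python
def shard(items: list, num_workers: int, rank: int) -> list:
    """Return the items assigned to worker `rank` (0-based) out of `num_workers`.

    Round-robin: worker r gets items r, r + N, r + 2N, ..., so shard sizes
    differ by at most one item.
    """
    if not 0 <= rank < num_workers:
        raise ValueError("rank must satisfy 0 <= rank < num_workers")
    return items[rank::num_workers]


samples = list(range(10))
for rank in range(3):
    print(rank, shard(samples, num_workers=3, rank=rank))
# 0 [0, 3, 6, 9]
# 1 [1, 4, 7]
# 2 [2, 5, 8]
```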

Data centers, on the other hand, provide a centralized location where machine learning servers are housed. These facilities are equipped with advanced cooling systems, backup power supplies, and high-speed internet connections, all of which are crucial for maintaining the performance and reliability of machine learning servers.

The data center environment ensures servers remain operational around the clock, allowing machine learning models to be continuously trained without interruption.

Client Workstation and Web Service Integration

Machine learning servers do not operate in isolation. They often need to integrate with client workstations and web services. This integration allows users to pull data from various sources, deploy models directly from their own computers, and even offer machine learning solutions as a web service.

The ability to connect to a client workstation seamlessly is crucial for data scientists and machine learning engineers, as it enables them to initiate remote sessions, access computational resources, and start training models without physical access to the server.

Web service integration is equally important, as it allows machine learning models to be deployed as services accessed over the internet. This capability is essential for businesses that want to incorporate machine learning into their applications.

By deploying models as web services, organizations can leverage machine learning solutions without needing complex infrastructure on the client side.
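As a minimal sketch of serving a model over HTTP, the example below wraps a stand-in "model" (a fixed linear function, assumed purely for illustration) in Python’s built-in `http.server`. The `/predict` route, the `{"x": ...}` request format, and the helper names are hypothetical. `http.server` is meant for demonstrations, not production; a real deployment would use a production web framework or a dedicated model server.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical stand-in for a trained model: y = 2x + 1.
WEIGHTS = {"slope": 2.0, "intercept": 1.0}


def predict(payload: dict) -> dict:
    """Apply the stand-in model to the 'x' field of a decoded JSON request."""
    x = float(payload["x"])
    return {"prediction": WEIGHTS["slope"] * x + WEIGHTS["intercept"]}


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            body = predict(json.loads(self.rfile.read(length)))
        except (ValueError, KeyError, TypeError):
            # Malformed JSON, missing "x", or a non-numeric value.
            self.send_error(400, 'expected JSON like {"x": 3}')
            return
        data = json.dumps(body).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port: int = 8000) -> None:
    """Block and serve predictions at http://127.0.0.1:<port>/predict."""
    HTTPServer(("127.0.0.1", port), PredictHandler).serve_forever()
```

After calling `serve()`, a request such as `curl -X POST http://127.0.0.1:8000/predict -d '{"x": 3}'` should return `{"prediction": 7.0}`.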

Benefits of Using a Machine Learning Server for Complex Tasks

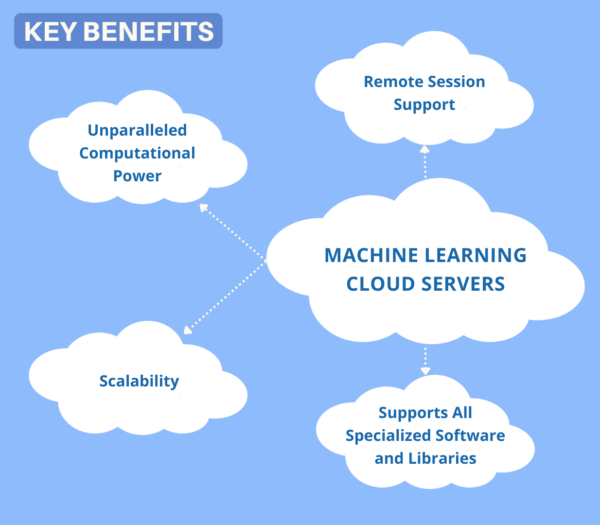

The deployment of machine learning servers in complex computational tasks offers many benefits. These servers, optimized for high-demand tasks, bring advantages that are indispensable for professionals in data science, deep learning, and related fields.

One of the primary benefits of a machine learning server is its computational power. It is built around components designed specifically for heavy machine learning workloads, such as Nvidia GPUs, so it can process and analyze vast datasets significantly faster than general-purpose computing systems. This capability is crucial for deep learning training, where the volume and complexity of data demand substantial computational resources.

Machine learning servers also offer scalability. Resources can be added or reduced as a project grows or shrinks, so both small and large projects get the capacity they need. This flexibility optimizes resource usage, reducing costs while maintaining performance.

Another significant advantage is support for remote sessions, which let users access the server’s computational resources from any location. This is especially beneficial for teams working on machine learning projects across different geographies, enabling collaboration and resource sharing without anyone needing to be physically near the server.

Machine learning servers typically come with the essential software and libraries for building models, including support for Python, TensorFlow, and PyTorch, which simplifies development and deployment. With these tools in place, data scientists can focus on improving models rather than fixing software issues. Many Python examples are available online, including the official TensorFlow and PyTorch tutorials, to help get started.

Machine Learning Use Cases

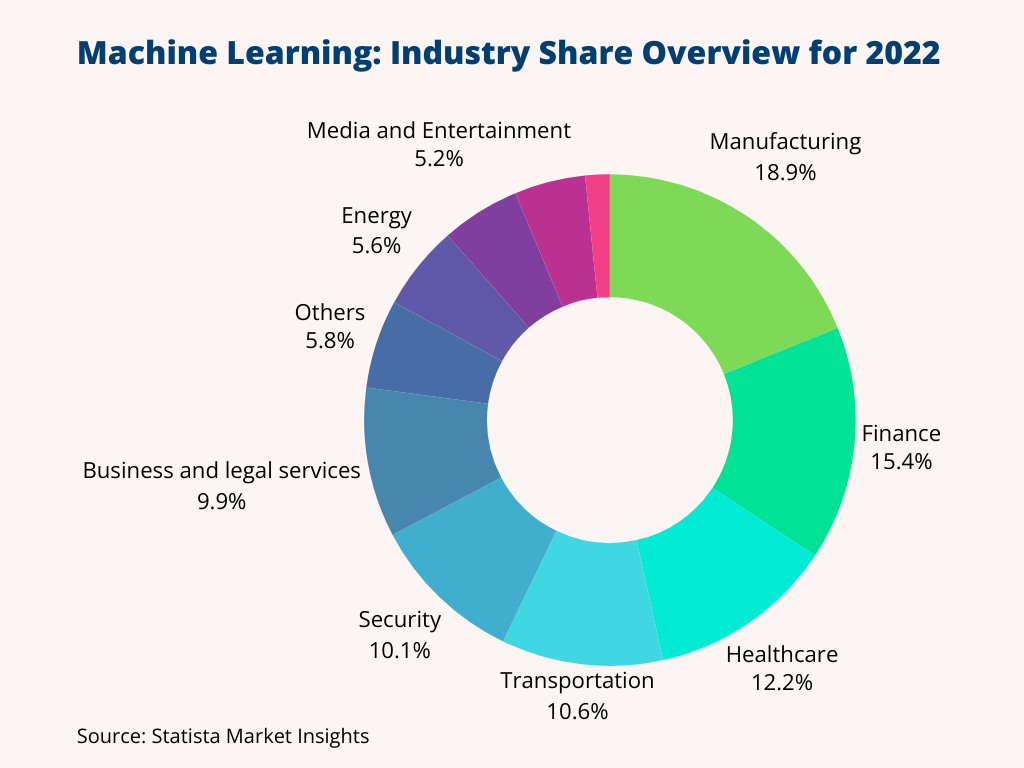

The versatility of machine learning servers extends to a wide range of use cases across various industries. From healthcare to finance, machine learning applications are reshaping how organizations approach problem-solving and decision-making.

In healthcare, machine learning servers are used to analyze medical images, predict patient outcomes, and personalize treatment plans. Their computational power enables the processing of large collections of medical images, supporting the early detection of diseases such as cancer. Machine learning models can also analyze patient data to predict health outcomes, helping doctors make informed decisions about treatment options.

In the financial sector, machine learning servers play a vital role in fraud detection, risk management, and algorithmic trading. By examining transaction data, machine learning models can detect patterns indicative of fraudulent activity, helping financial institutions prevent losses.
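A very simple version of this pattern-detection idea is to learn what typical transaction amounts look like from past data and flag new transactions that deviate strongly from them. The sketch below uses a z-score test; the sample amounts, the 3-standard-deviation threshold, and the function names are assumptions for illustration. Real fraud models draw on many more signals than the amount alone.

```python
from statistics import mean, stdev


def fit_baseline(history: list[float]) -> tuple[float, float]:
    """Learn the mean and standard deviation of past transaction amounts."""
    return mean(history), stdev(history)


def flag_suspicious(amounts: list[float], baseline: tuple[float, float],
                    threshold: float = 3.0) -> list[int]:
    """Return indices of amounts more than `threshold` standard deviations
    away from the historical mean (a z-score test)."""
    mu, sigma = baseline
    if sigma == 0:
        return []  # no variation in the history to compare against
    return [i for i, a in enumerate(amounts) if abs(a - mu) / sigma > threshold]


# Hypothetical past amounts for one customer.
history = [20.0, 25.0, 22.0, 30.0, 18.0, 27.0, 24.0, 21.0]
baseline = fit_baseline(history)
print(flag_suspicious([23.0, 26.0, 480.0], baseline))  # → [2]
```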

Likewise, these models can evaluate the risk associated with loans and investments, enabling more precise decisions. Algorithmic trading systems powered by machine learning servers can analyze market data in real time to make trading decisions, aiming to maximize profits while managing risk.

Machine learning servers are used in marketing for customer segmentation and recommendation systems. Customer data analysis helps identify distinct segments for customized marketing strategies. Recommendation systems suggest products based on customer preferences and behavior. Sentiment analysis gauges public sentiment towards brands for marketing insights.
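Recommendation systems are often built on collaborative filtering: customers who bought similar things in the past are likely to want similar things next. The sketch below implements a minimal user-based variant with cosine similarity over hypothetical purchase counts; the data and function names are illustrative, and production recommenders operate on far larger and sparser data.

```python
from math import sqrt

# Hypothetical purchase counts: customer -> {product: count}.
purchases = {
    "alice": {"laptop": 1, "mouse": 2, "keyboard": 1},
    "bob": {"laptop": 1, "mouse": 1, "monitor": 1},
    "carol": {"blender": 2, "toaster": 1},
}


def cosine(a: dict, b: dict) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def recommend(user: str, data: dict, top_n: int = 3) -> list[str]:
    """Score products the user has not bought by how similar their buyers
    are to the user, then return the highest-scoring products."""
    scores: dict[str, float] = {}
    for other, items in data.items():
        if other == user:
            continue
        sim = cosine(data[user], items)
        if sim <= 0:
            continue  # ignore customers with nothing in common
        for item, count in items.items():
            if item not in data[user]:
                scores[item] = scores.get(item, 0.0) + sim * count
    return sorted(scores, key=lambda i: (-scores[i], i))[:top_n]


print(recommend("alice", purchases))  # → ['monitor']
```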

In cloud rendering and design, machine learning servers can automate the creation of realistic models and textures, improving rendering algorithms to generate high-quality visuals in less time. This application is especially advantageous for professionals in 3D design, architecture, and animation, where the need for high-quality visuals is steadily growing.

The advantages of using a machine learning server for complex tasks are numerous, providing substantial benefits in computational strength, scalability, and adaptability. The broad spectrum of applications across various industries underlines the transformative capacity of machine learning, driven by the capabilities of dedicated servers.

As machine learning progresses, the role of these servers in facilitating and advancing machine learning solutions will only grow more crucial, underscoring the significance of comprehending and utilizing this technology for upcoming innovations.

Data Preparation and Remote Sessions

Data preparation is often cited as one of the most time-consuming aspects of machine learning. It involves cleaning, structuring, and enriching raw data to make it suitable for training models.
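The cleaning and structuring steps can be illustrated on a handful of hypothetical raw records. The sketch drops rows with missing or unparseable fields, removes exact duplicates, converts strings to numbers, and then min-max scales each column to [0, 1]. The field names and the choice of scaling are assumptions for illustration; real pipelines usually rely on tools such as pandas for this.

```python
# Hypothetical raw records (e.g., parsed from a CSV file) with typical defects.
raw = [
    {"age": "34", "income": "52000"},
    {"age": "", "income": "61000"},     # missing value
    {"age": "29", "income": "48000"},
    {"age": "34", "income": "52000"},   # exact duplicate
    {"age": "41", "income": "n/a"},     # unparseable value
]


def clean(records: list[dict]) -> list[tuple[float, float]]:
    """Drop rows with missing or invalid fields and duplicates; convert to floats."""
    seen, rows = set(), []
    for record in records:
        try:
            row = (float(record["age"]), float(record["income"]))
        except (KeyError, ValueError):
            continue  # skip incomplete or malformed rows
        if row not in seen:
            seen.add(row)
            rows.append(row)
    return rows


def min_max_scale(rows: list[tuple[float, ...]]) -> list[tuple[float, ...]]:
    """Rescale every column to [0, 1] so features share a comparable range."""
    if not rows:
        return []
    columns = list(zip(*rows))
    lows = [min(c) for c in columns]
    highs = [max(c) for c in columns]
    return [
        tuple((v - lo) / (hi - lo) if hi > lo else 0.0
              for v, lo, hi in zip(row, lows, highs))
        for row in rows
    ]


rows = clean(raw)
print(rows)                 # → [(34.0, 52000.0), (29.0, 48000.0)]
print(min_max_scale(rows))  # → [(1.0, 1.0), (0.0, 0.0)]
```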

The complexity of data preparation grows with the volume and variety of data, requiring significant computational resources and expertise. Remote sessions, while enabling access to machine learning servers from any location, also pose network stability and security challenges. Secure and efficient remote access, for example over encrypted connections, is paramount for safeguarding sensitive data and maintaining the integrity of machine learning tasks.

Deploying Models and Generative AI

Deploying machine learning models into production environments presents its own set of challenges. Ensuring that models perform as expected under different conditions requires thorough testing and monitoring. Additionally, the emergence of generative AI, which can create new, synthetic data that resembles real data, raises ethical concerns, such as the creation of convincing fake content.
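Monitoring often includes checking whether production data has drifted away from the data a model was trained on. One widely used measure is the Population Stability Index (PSI), sketched below for a single numeric feature with equal-width bins. The bin count and the small `eps` used to avoid taking the logarithm of zero are assumptions; a commonly cited rule of thumb treats a PSI above about 0.25 as a significant shift.

```python
from math import log


def psi(expected: list[float], actual: list[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of one numeric feature.

    `expected` holds training-time values, `actual` holds production values.
    Bins are equal-width over the range of `expected`; values outside that
    range are clamped into the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for constant data

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm below stays finite.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * log(ai / ei) for ei, ai in zip(e, a))


train = [float(v) for v in range(100)]
print(psi(train, train))                    # → 0.0
print(psi(train, [v + 50 for v in train]))  # large value: strong drift
```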

The computational demands of training generative AI models are substantial, necessitating powerful machine learning servers and careful consideration of the ethical implications of their use.

How to Set Up a Machine Learning Server

Setting up a machine learning server involves several key steps, most of which concern choosing and installing the appropriate software on the remote server.

The process begins with identifying the specific requirements of the machine learning tasks, including computational power, memory, storage, and network capabilities, and selecting hardware that meets them. Cloud providers offer pre-assembled servers on which users can choose the operating system.

After this, it’s up to the user to install the needed software. Once this is done, training can start. If more computational capacity is needed, the user can clone the preconfigured system onto the next server, and so on.
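Cloning a configured server is easiest when its software environment is recorded. For the Python side, the standard approach is `pip freeze > requirements.txt` on the configured server followed by `pip install -r requirements.txt` on the next one. The sketch below shows the same idea with `importlib.metadata`; the helper names are hypothetical, and the snapshot does not capture the operating system, GPU drivers, or CUDA libraries, which are usually carried over by cloning the whole machine image.

```python
from importlib.metadata import distributions


def freeze() -> list[str]:
    """Return installed Python packages as sorted 'name==version' lines."""
    lines = set()
    for dist in distributions():
        name = dist.metadata.get("Name")  # skip broken installs without a name
        if name:
            lines.add(f"{name}=={dist.version}")
    return sorted(lines, key=str.lower)


def write_requirements(path: str = "requirements.txt") -> None:
    """Write the snapshot so `pip install -r <path>` can replay it elsewhere."""
    with open(path, "w", encoding="utf-8") as fh:
        fh.write("\n".join(freeze()) + "\n")
```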

Conclusion: Stay Competitive with Machine Learning Servers

Machine learning servers are at the heart of modern AI applications. They provide the computing power needed for complex data processing and model training, enabling businesses and researchers to turn large datasets into actionable insights and innovative solutions, and to make informed decisions with confidence. By investing in the right server infrastructure, organizations can scale their machine learning projects efficiently. As technology advances, machine learning servers will play an increasingly important role in driving future progress.

Frequently Asked Questions:

Do you need a server for AI?

Due to their high computational and data handling requirements, AI and machine learning tasks often require a dedicated server.

What system is required for machine learning?

Effective machine learning calls for a system with high-performance CPUs, GPUs (preferably Nvidia), ample memory, and high-speed storage.

What is a server in machine learning?

A machine learning server is a powerful computer equipped with specialized hardware and software designed to handle the computational demands of training machine learning models.

How do I set up a machine-learning server?

Setup involves selecting a cloud server that meets the requirements of the training to be performed and then installing and configuring the machine learning libraries and frameworks.

Can you run AI from a server?

Yes. AI and machine learning models can be run from a dedicated server, which provides the necessary computational resources for complex tasks.